Following the surprising success of ChatGPT, the rise of large models for images and videos, and the billions being poured into 'foundation models', there is one question on everyone's mind: "Will training a large model on an even larger dataset give us general-purpose robots?"

Now, before we launch into the debate, let's do a reality check on that 'large dataset'. Do we have it? OpenAI is definitely interested in such data. So are Meta, Google, and xAI.

A big reason why LLMs have seen so much excitement and adoption is because they’re extremely easy to use: especially by non-experts. One doesn’t have to know about the details of training an LLM, or perform any tough setup, to prompt and use these models for their own tasks. Most robot learning approaches are currently far from this. They often require significant knowledge of their inner workings to use, and involve very significant amounts of setup. Perhaps thinking more about how to make robot learning systems easier to use and widely applicable could help improve adoption and potentially scalability of these approaches.

- Sergey Levine

LLMs had a good thing going for them. These fellas didn't need to think about sensory inputs coupled with action pairs or actuator values. The internet is full of text, images, and videos. So, how can we leverage that for robotics?

Let's think step by step... ha!

Data Augmentation

Now, data augmentation is not the new kid on the block, but it has gone through the same evolution as Lara Croft in game graphics. We went from random cropping and zooming in and out, to adding fake Gaussian noise, rain, and sun, to craftily managing every pixel in the image with GenAI to give us a completely new set of data.

We went from this ->

To this ->
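As a rough illustration of the classical end of that spectrum, here is a minimal sketch of random cropping plus Gaussian noise on a tiny grayscale image stored as a list of lists. The function names, image size, and noise level are all assumptions made up for this example; real pipelines would normally lean on an image library instead.

```python
import random


def random_crop(image, crop_h, crop_w, rng):
    """Cut a random crop_h x crop_w window out of a 2D grayscale image."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)   # randint bounds are inclusive
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clamp pixels back into [0, 255]."""
    return [
        [min(255.0, max(0.0, pixel + rng.gauss(0.0, sigma))) for pixel in row]
        for row in image
    ]


def augment(image, crop_h, crop_w, sigma, rng):
    """One augmented variant: random crop first, then noise."""
    cropped = random_crop(image, crop_h, crop_w, rng)
    return add_gaussian_noise(cropped, sigma, rng)


# Hypothetical 8x8 "image" and four augmented 6x6 variants of it.
rng = random.Random(0)
image = [[float((r * 8 + c) % 256) for c in range(8)] for r in range(8)]
variants = [augment(image, 6, 6, 5.0, rng) for _ in range(4)]
```

Every call yields a slightly different view of the same scene, which is the whole trick: one recorded frame turns into many training samples.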

Standardised Mapping across Different Embodiments

We don't really have a single standard robot, but hundreds of different robots with very different sets of possible actuator values. So even if you found some way to collect the data for one particular robot, that doesn't give you much leverage in scaling it to other types of robots. The actuation values for a Tesla Optimus are very different from those of Boston Dynamics' Spot or a standalone robotic arm. However, the team at Open X-Embodiment did an awesome job of standardizing and tokenizing the action values across robots of various brands, shapes, and forms. So, there is still hope for Gondor...
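To make "standardizing and tokenizing" a bit more concrete, here is a minimal sketch of one common way to do it: clip each action dimension to that robot's own range, rescale it, and bucket it into a fixed number of bins so every robot ends up speaking the same token vocabulary. This is an assumption for illustration, not a description of the exact Open X-Embodiment pipeline; the function names, the 256-bin choice, and the two robots' ranges are hypothetical.

```python
def tokenize_action(action, low, high, num_bins=256):
    """Map each continuous action dimension to an integer token in [0, num_bins - 1]."""
    tokens = []
    for value, lo, hi in zip(action, low, high):
        clipped = min(hi, max(lo, value))          # stay inside the robot's range
        fraction = (clipped - lo) / (hi - lo)      # rescale to [0, 1]
        tokens.append(min(num_bins - 1, int(fraction * num_bins)))
    return tokens


def detokenize_action(tokens, low, high, num_bins=256):
    """Map tokens back to the centre of their bin in the robot's own units."""
    return [
        lo + (token + 0.5) / num_bins * (hi - lo)
        for token, lo, hi in zip(tokens, low, high)
    ]


# Two hypothetical robots with very different action ranges.
arm_low, arm_high = [-0.05, -0.05, -0.05], [0.05, 0.05, 0.05]   # end-effector deltas, metres
base_low, base_high = [-1.5, -1.5, -2.0], [1.5, 1.5, 2.0]       # base velocities

arm_tokens = tokenize_action([0.01, -0.02, 0.0], arm_low, arm_high)
base_tokens = tokenize_action([0.8, 0.0, -1.0], base_low, base_high)
```

Both token lists live in the same 0 to 255 range even though the underlying units differ, so a single model can be trained on both. The price is a small quantization error of at most half a bin width per dimension.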

Simulations

Domain Randomisation: Most of the early success of sim-to-real came from domain randomisation. OpenAI's Rubik's Cube-solving Shadow Hand is a good example.

AI algorithms are lazy and tend to overfit to the easiest pattern. Hence, to keep the AI from memorizing the simulation and then failing in the real world, you randomize everything in the domain that you don't want it to overfit to.

In simple words, instead of one good sim, you need 100 bad sims.
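Here is a minimal sketch of what "100 bad sims" could look like in code: every training episode draws its own physics and rendering parameters from hand-picked ranges. The parameter names and ranges are hypothetical, and nothing here is tied to a specific simulator; you would pass the sampled values into whatever engine you use.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    friction: float
    object_mass_kg: float
    light_intensity: float
    camera_jitter_deg: float


# Hypothetical ranges; in practice these are tuned per task and per robot.
RANGES = {
    "friction": (0.5, 1.5),
    "object_mass_kg": (0.05, 0.5),
    "light_intensity": (0.3, 1.0),
    "camera_jitter_deg": (-5.0, 5.0),
}


def sample_sim_params(rng):
    """Draw one randomized simulation configuration."""
    return SimParams(**{
        name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()
    })


# One hundred slightly "wrong" worlds instead of a single carefully tuned one.
rng = random.Random(42)
episodes = [sample_sim_params(rng) for _ in range(100)]
```

The bet is that if a policy works across all of these worlds, the real one is just another sample from the distribution.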

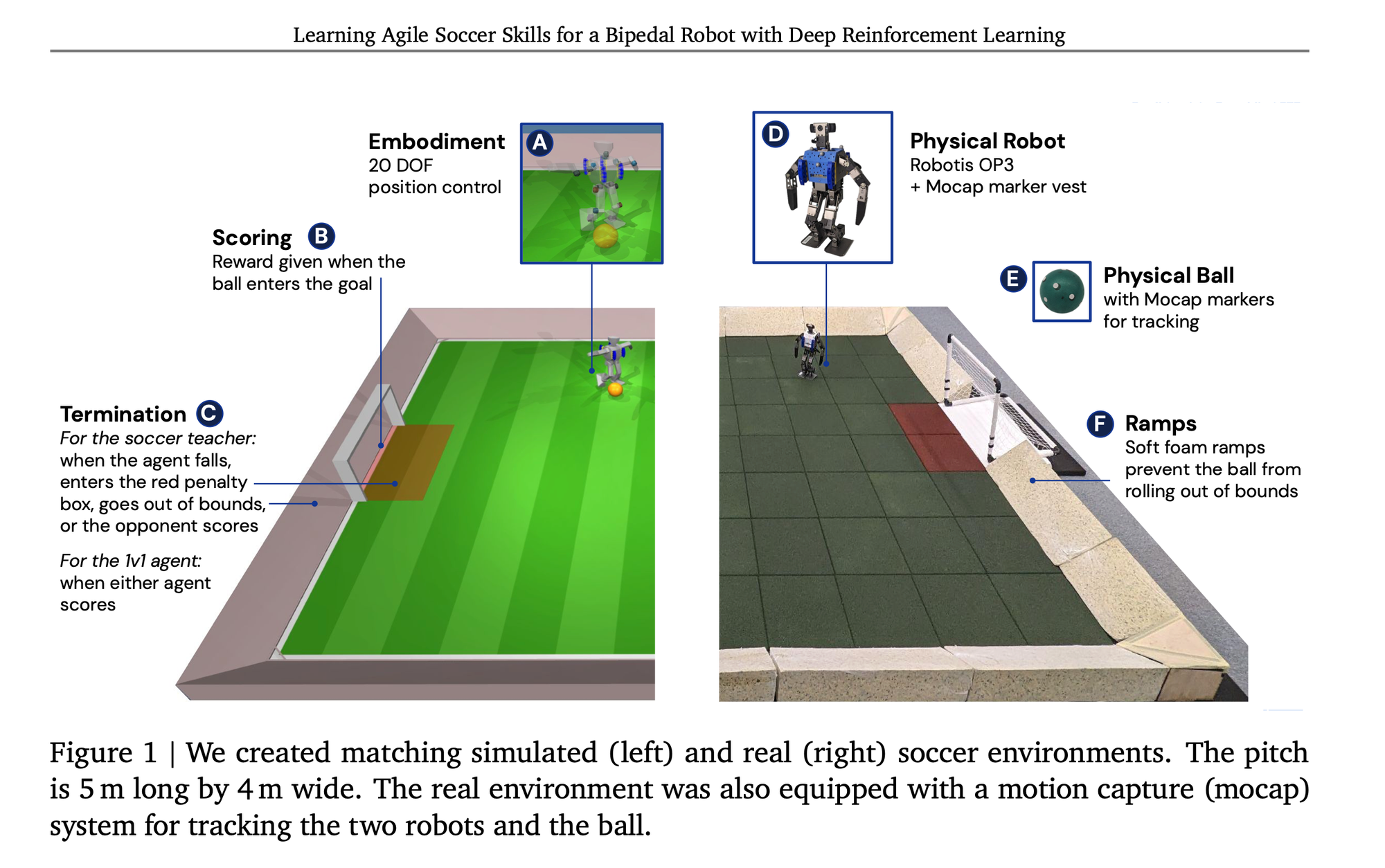

NeRF: Domain randomization works around the problem of not being able to imitate reality. But with NeRFs, you can actually imitate reality, which is exactly what DeepMind did with their bipedal robots.

They created a sim based on the MuJoCo engine using NeRF. In addition, they randomized training over NeRF models captured at different points in time to be robust to changes in the background and lighting conditions. Now, that is next-level data augmentation.

And this is just the beginning. We are seeing LLM-powered sims that automatically create new tasks and expert policies to train on, and that use 3D generation models to create realistic and diverse environments for training. There are attempts at using simulation to generate 100x more data from human demonstrations. Combining GenSim and MimicGen gives us very powerful tools for scaling robotics datasets. Combine that with NeRF, and you have a powerful simulation platform for training the next generation of autonomous robots.
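To give a feel for how one human demonstration can be multiplied, here is a heavily simplified 2D sketch loosely inspired by the MimicGen idea: express the recorded end-effector waypoints relative to the object, then replay them around freshly sampled object poses. Everything here (function names, planar poses, sampling ranges) is an assumption for illustration. The real system works with full 3D poses and task segments, and checks the generated trajectories in simulation.

```python
import math
import random


def to_object_frame(waypoints, obj_pose):
    """Express world-frame (x, y) waypoints relative to an object pose (x, y, theta)."""
    x0, y0, theta = obj_pose
    c, s = math.cos(theta), math.sin(theta)
    relative = []
    for x, y in waypoints:
        dx, dy = x - x0, y - y0
        relative.append((c * dx + s * dy, -s * dx + c * dy))  # inverse rotation
    return relative


def from_object_frame(relative, obj_pose):
    """Map object-relative waypoints back into the world for a given object pose."""
    x0, y0, theta = obj_pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x0 + c * rx - s * ry, y0 + s * rx + c * ry) for rx, ry in relative]


def generate_demos(demo, src_pose, num_new, rng):
    """Replay one demonstration around num_new randomly placed objects."""
    relative = to_object_frame(demo, src_pose)
    new_demos = []
    for _ in range(num_new):
        new_pose = (
            rng.uniform(-0.5, 0.5),
            rng.uniform(-0.5, 0.5),
            rng.uniform(-math.pi, math.pi),
        )
        new_demos.append((new_pose, from_object_frame(relative, new_pose)))
    return new_demos


# Hypothetical reach-to-object demo that ends exactly at the object position.
src_pose = (0.5, 0.2, 0.0)
demo = [(0.0, 0.0), (0.25, 0.1), (0.5, 0.2)]
new_demos = generate_demos(demo, src_pose, 100, random.Random(0))
```

One recorded reach becomes a hundred reaches toward a hundred object placements, each still ending at its object.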

In the following articles, let's dive deep into some of the papers, data augmentation, and simulations for end-to-end robots.

And for now, let's hallucinate...

Ridiculous Ideas

Use the DIY data on YouTube:

There are numerous high-quality videos on YouTube where the camera position is fixed and the person in the video live-narrates every frame. Using pose-detection models and friends, you can get the trajectory of the hands and arms. You can also infer the opening and closing of the hands, which you can then perhaps tokenize and map to robotic arms. However, we are stuck in image coordinates, and whoever finds a way to get to 3D coordinates deserves a cookie here.
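Why are image coordinates such a sticking point? A minimal sketch using the standard pinhole camera model shows it: a pixel only becomes a 3D point once you know its depth and the camera intrinsics, and a YouTube video gives you neither. The intrinsics and pixel values below are made-up numbers for illustration.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) at a known depth to a 3D point in the camera frame.

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Hypothetical intrinsics for a 640x480 camera.
fx, fy, cx, cy = 600.0, 600.0, 320.0, 240.0

# The same detected wrist pixel is consistent with many different 3D positions.
wrist_pixel = (400.0, 300.0)
candidates = [backproject(*wrist_pixel, depth, fx, fy, cx, cy) for depth in (0.5, 1.0, 2.0)]
```

All three candidates project onto the same pixel, so without a depth estimate (or some other geometric cue) the hand trajectory stays ambiguous along the viewing ray.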

GenAI can help. Similar to GenSim, if we can create a system that takes the videos, recreates the assets, and creates a 3D sim with a thousand more datapoints similar to MimicGen, we immediately have a lot more data to train.

Adept AI, which recently released the multimodal model Fuyu-8B, is seemingly training a model on things like YouTube tutorials, and very likely so is Mistral.

AR, Pendants and Pins:

Mixed reality devices like Apple Vision Pro and Meta Quest have a spatial understanding of their surroundings and can track your hands in 3D space. As soon as data starts flowing in from AR glasses and wearable devices, you will have very unique ego-view data, which can, in theory, even lead to the emergence of identity and embodied agents. There is even a Schmidhuber paper on the topic.

I can't find the paper, but it discusses the concept of self-modeling robots, which is a key step towards creating robots that can understand parts of themselves, such as their arm, as being part of their own entity.

Even More Ridiculous Idea

Tele-operated kitchen robots:

Who doesn't want a personal chef who also cleans up after cooking? There are a few startups working on robotic kitchens, but it is going to take another 5 to 10 years to have autonomous robots in everyday kitchens. Meanwhile, people spend $30K to $100K on nice kitchens. What if the kitchen came with two robotic arms attached to it? It cooks and cleans up for you when you are away or sleeping. You don't care whether the arms are autonomous or teleoperated. If you can have a teleoperated car, you can have a teleoperated robot. This would be quite handy for the elderly and for senior homes. Not to mention, a teleoperated kitchen robot will give you a lot of high-quality data and the perks of shadow learning.

Conclusion

Well, it's time to get creative with building the dataset for the next generation of robots. LLMs and GenAI are powerful tech, and it would be a shame if we could not leverage them to push the boundaries of robotics and automation.