I recently watched “State of GPT” by Karpathy. I always enjoy his talks, especially those he delivered during his tenure at Tesla. Each time I watched one, it felt as if he was offering a peek into information that wasn’t publicly available — a sort of secret sauce. He consistently shared insights about Tesla’s autopilot DL architectures, data strategies, and more. Unfortunately, he refrained from doing so this time, which, while not surprising considering OpenAI’s “commitment to openness”, is somewhat disappointing.

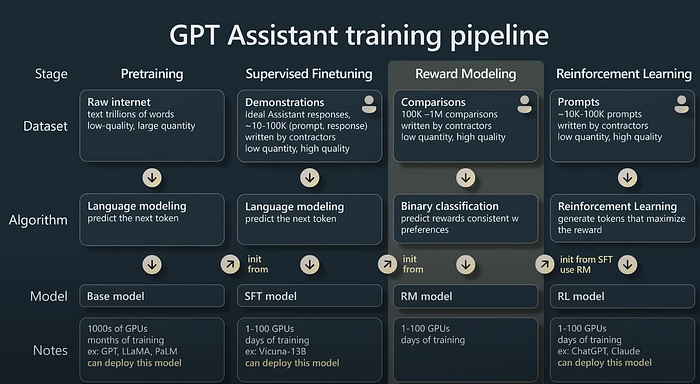

The key takeaway from this talk is understanding the four stages of training a GPT-4-like model:

- Base: Pretraining models on a vast amount of low-quality, high-quantity internet data.

- Supervised Fine-tuning (SFT): Fine-tuning base models on ideal assistant responses.

- Reward Modeling: Training a model to compare good and bad outputs (trained on pairs of good and bad content)

- Reinforcement Learning: Training a model to maximize the reward (RM model)

This understanding is crucial to developing an intuition about what works and why.

Interested in learning more without watching it? here are my key takeaways from this talk:

- 99% of computing power goes towards pre-training (low quality, trillions of words, low quality).

- Context length is the maximum number of tokens a language model will consider when predicting the next one.

- Base models are not assistants, and prompting them to behave as such can be tricky and unreliable.

- Supervised fine-tuning involves generating ideal responses to questions, leading to detailed answers by professional contractors under very specific labeling instructions.

- Reward models evaluate how suitable a completion is for a given prompt.

- The language modeling objective is adjusted according to the output from the reward model (this is fixed after its initial training phase).

- Outputs from RLHF-trained models are preferred by humans. Additionally, humans find it easier to compare/discriminate than to generate (for example, a haiku generation task).

- According to Karpathy, in a scenario where you have N things and need more, base models might be better suited.

- Models need “tokens to think” — to break things up and initiate an internal monologue.

- Asking for reflection or self-criticism can sometimes lead the model to recognize that what they produced didn’t meet the criteria, but only after the fact!

- Language models don’t have an inner monologue (like humans do) — this is an important factor to consider when assigning tasks to them.

- Karpathy employs the System One and System Two analogy to LLMs (Thinking, Fast and Slow Book by Daniel Kahneman)

- “Guidance” by Microsoft is a framework/prompting language that allows for constrained generations.

- LoRA facilitates fine-tuning of language models without breaking the bank, on consumer hardware.